Introducing Books2Rec

I am proud to announce that Books2Rec, the book recommendation system I have been working on for the last couple of months, is live. Using your Goodreads profile, Books2Rec uses Machine Learning methods to provide you with highly personalized book recommendations. Don’t have a Goodreads profile? We’ve got you covered - just search for your favorite book.

What is Books2Rec?

Books2Rec is a book recommender system powered by Machine Learning. It was built for our Big Data Science course @ NYU. The website is fully working, and you can get book recommendations using your Goodreads profile. We aim to continue working on it throughout the summer in order to improve it further.

This post is a continuation of my previous blog post, Adventures with RapidMiner, where we explored the Recommender System implementations of RapidMiner.

Why do we need recommender systems?

Recommender systems are at the forefront of the ways in which content-serving websites like Facebook, Amazon, and Spotify interact with their users. It is said that 35% of Amazon.com’s revenue is generated by its recommendation engine[1]. Given this climate, it is paramount that websites aim to serve the best personalized content possible.

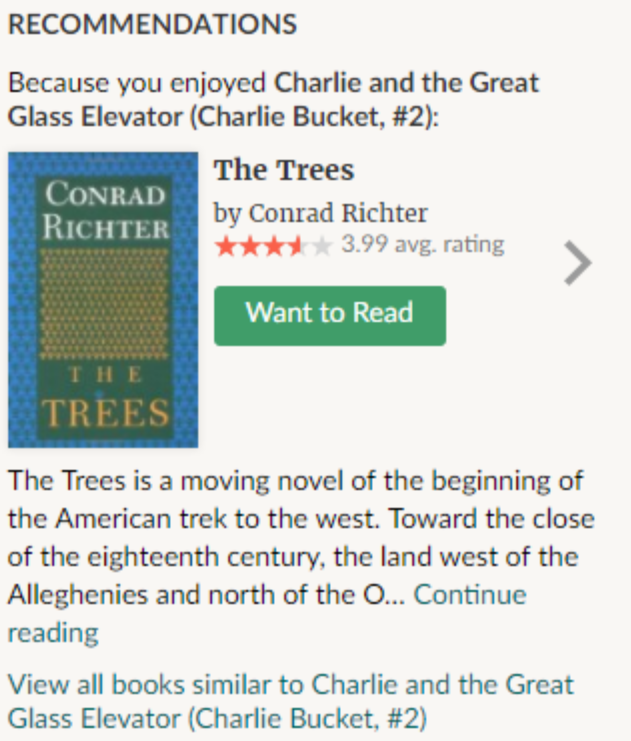

As a trio of book lovers, we looked at Goodreads, the world’s largest site for readers and book recommendations. It is owned by Amazon, which itself has a stellar recommendation engine. However, we found that their recommendations leave a lot to be desired.

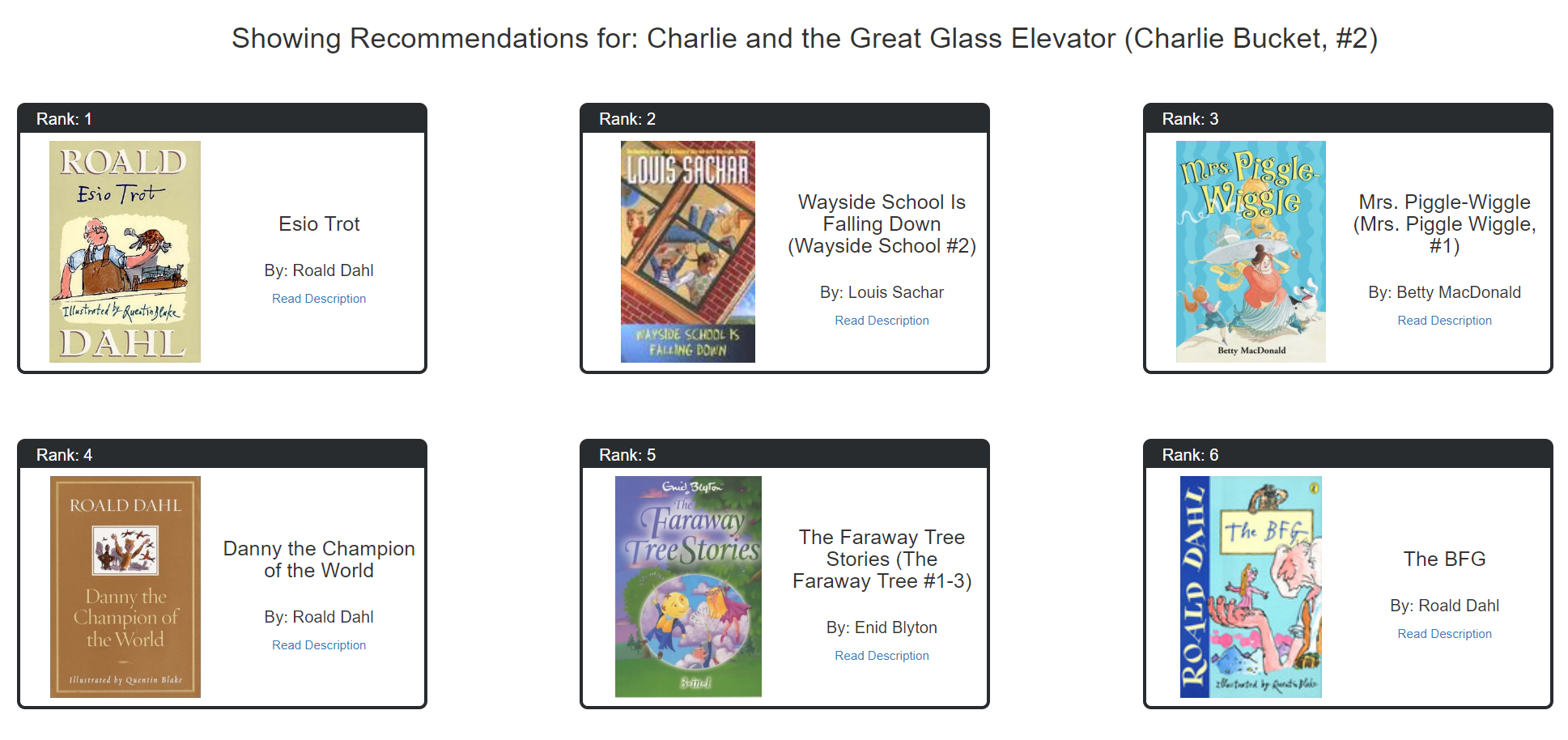

For Goodreads users, we use a hybrid recommender system that draws on both user ratings and item features.

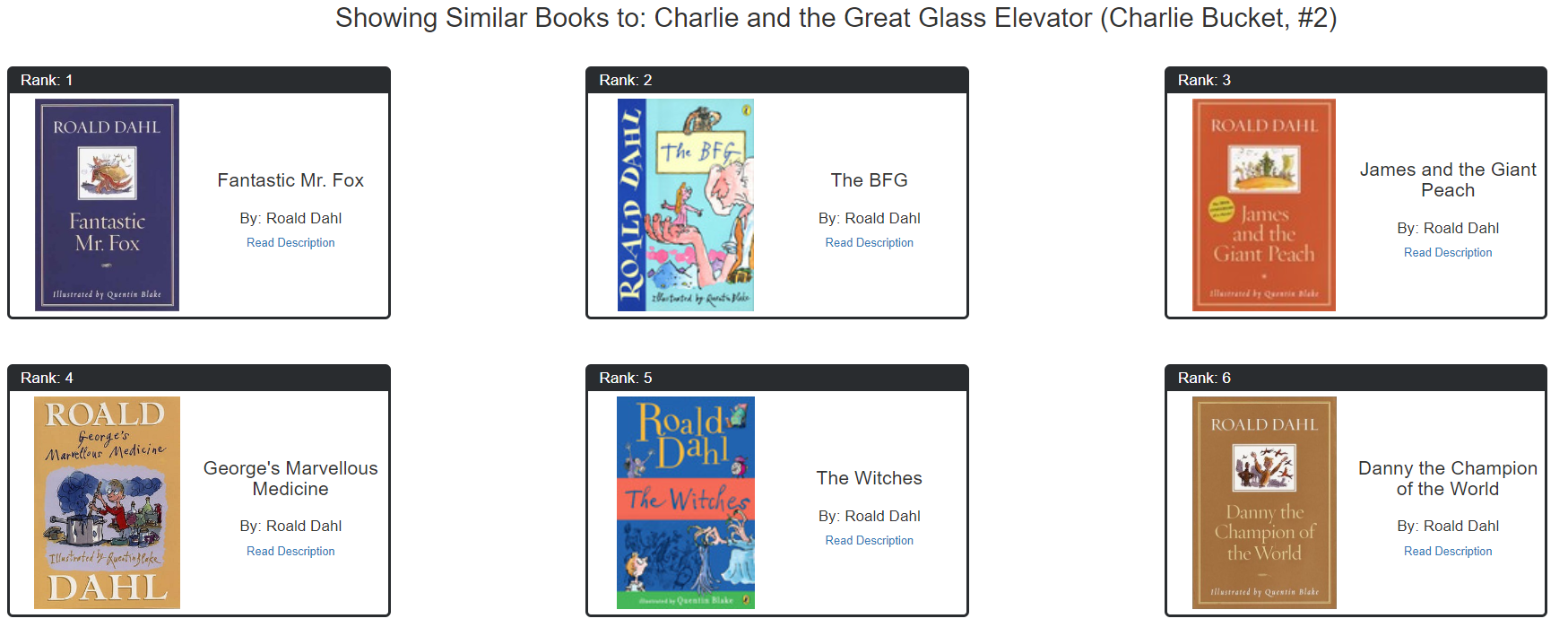

We also provide more ‘traditional’ recommendations that only use the book’s features.

author not being a feature we included in our model.

How it Works

We use a hybrid recommender system to power our recommendations. Hybrid systems combine two other types of recommender systems: content-based filtering and collaborative filtering. Content-based filtering recommends items based on how similar the items themselves are. That is, if I like the first book of the Lord of the Rings, and the second book is similar to the first, the system can recommend the second book to me. Collaborative filtering uses user ratings to determine user or item similarities. If the ratings users give the first Lord of the Rings book are highly correlated with the ratings they give the second, then the two books are deemed to be similar.
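To make the two notions of similarity concrete, here is a minimal sketch rather than the code behind Books2Rec. The book names, genre features, and ratings are made up for illustration, unrated books are crudely encoded as 0, and cosine similarity stands in for whatever similarity measure a real system would use.

```python
import numpy as np

def cosine_similarity(a, b):
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom else 0.0

# Hypothetical content features per book: [fantasy, romance, young_adult].
features = {
    "fellowship": np.array([1.0, 0.0, 0.0]),
    "two_towers": np.array([1.0, 0.0, 0.0]),
    "ya_romance": np.array([0.0, 1.0, 1.0]),
}

# Hypothetical ratings per book, one entry per user in a fixed user order.
# 0 means "not rated" here, which is a simplification for this toy example.
ratings = {
    "fellowship": np.array([5.0, 4.0, 0.0, 5.0]),
    "two_towers": np.array([5.0, 4.0, 0.0, 4.0]),
    "ya_romance": np.array([0.0, 1.0, 5.0, 0.0]),
}

# Content-based view: compare what the books are.
content_sim = cosine_similarity(features["fellowship"], features["two_towers"])
content_sim_other = cosine_similarity(features["fellowship"], features["ya_romance"])

# Collaborative view: compare how the same users rated the books.
collab_sim = cosine_similarity(ratings["fellowship"], ratings["two_towers"])
collab_sim_other = cosine_similarity(ratings["fellowship"], ratings["ya_romance"])

print("content:", content_sim, content_sim_other)
print("collaborative:", collab_sim, collab_sim_other)
```

In this toy data both views agree that the two Lord of the Rings books are close to each other and far from the third book. The interesting cases are the ones where the two views disagree.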

Our hybrid system uses both of these approaches. Our item similarities are a combination of user ratings and features derived from books themselves.

Powering our recommendations is the Netflix Prize-winning SVD algorithm[2]. It is, without doubt, one of the most monumental algorithms in the history of recommender systems. Over time, we aim to improve our recommendations using the latest trends in recommender systems.

SVD for Ratings Matrix

What makes the SVD algorithm made famous during the Netflix challenge different from standard SVD is that it does NOT assume missing values are 0[3]. Standard SVD gives a perfect reconstruction of a matrix but has one flaw for our purposes: if a user has not rated a book (which is going to be the case for most books), then SVD would model that user as having given a 0 rating to every unrated book.

In order to use SVD for rating predictions, you have to update the values in the matrix to negate this effect. You can use Gradient Descent on the error function of predicted ratings to accomplish this. Once you run Gradient Descent enough times, every value in the decomposed matrix begins to better reflect the correct values for predicting missing ratings, and not for reconstructing the matrix.
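The idea can be sketched in a few lines of NumPy. This is an illustrative, simplified version that assumes stochastic gradient descent with L2 regularization and no bias terms, not the implementation used by Books2Rec. The function names, hyperparameters, and toy ratings are all made up for the example. The key point is that the loop only ever visits observed ratings, so missing entries are never treated as zeros.

```python
import numpy as np

def train_latent_factors(ratings, n_users, n_items, n_factors=2,
                         lr=0.02, reg=0.02, n_epochs=500, seed=0):
    """Fit user and item factors by SGD on observed ratings only.

    ratings: list of (user_index, item_index, rating) triples.
    """
    rng = np.random.default_rng(seed)
    P = rng.normal(scale=0.1, size=(n_users, n_factors))  # user factors
    Q = rng.normal(scale=0.1, size=(n_items, n_factors))  # item factors
    for _ in range(n_epochs):
        for u, i, r in ratings:
            err = r - P[u] @ Q[i]          # error on this known rating
            p_u = P[u].copy()              # keep the old value for Q's update
            P[u] += lr * (err * Q[i] - reg * P[u])
            Q[i] += lr * (err * p_u - reg * Q[i])
    return P, Q

def rmse(ratings, P, Q):
    """Root mean squared error over the given (user, item, rating) triples."""
    errors = [r - P[u] @ Q[i] for u, i, r in ratings]
    return float(np.sqrt(np.mean(np.square(errors))))

# Hypothetical toy data: 4 users, 3 books, 9 known ratings out of 12 cells.
toy_ratings = [(0, 0, 5), (0, 1, 4), (1, 0, 4), (1, 1, 5), (1, 2, 1),
               (2, 0, 1), (2, 2, 5), (3, 1, 2), (3, 2, 4)]

P0, Q0 = train_latent_factors(toy_ratings, 4, 3, n_epochs=0)  # untrained
P, Q = train_latent_factors(toy_ratings, 4, 3)

rmse_before = rmse(toy_ratings, P0, Q0)
rmse_after = rmse(toy_ratings, P, Q)
print("RMSE before/after training:", rmse_before, rmse_after)

# Predicted rating for a cell that was never observed (user 0, book 2).
print("prediction for a missing rating:", float(P[0] @ Q[2]))
```

A real system would measure the error on held-out ratings rather than on the training triples, and would typically add per-user and per-item bias terms.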

Why Hybrid?

Why would we not just use one hyper-optimized latent factor (SVD) model instead of combining it with a content-based model?

The answer is simply that a pure SVD model can lead to nonsensical, ‘black box’ recommendations that can turn users away. A trained SVD model is simply trying to assign factor strengths in a matrix for each item in order to minimize some cost function. This cost function simply measures the error of predicting hidden ratings in a test set. The result is a highly optimized model that, when finally used to make recommendations for new users, can spit out subjectively strange recommendations.

For example, say there is some book A that, after being run through a trained SVD model, is most similar in terms of ratings to a book B. The issue is that book B can be completely unrelated to A by ‘traditional’ standards (what the book is about, the genre, etc.). This can lead to a book like The Lord of the Rings: The Return of the King ending up most ‘similar’ to a completely unrelated book like The Sisterhood of the Traveling Pants (yes, this happened). It could just be the case that these two books happen to be rated similarly by users, and thus the SVD model learns to recommend them together because doing so minimizes its error function. However, if you ask most fantasy readers, they would probably prefer to be recommended more fantasy books (but not just all the other books by Tolkien).

What this leads to is a search for balance between exploration (using SVD to recommend books that are similar only in how they are rated by tens of thousands of users) and understandable recommendations (using content features to recommend other fantasy books if the user has enjoyed the Lord of the Rings books). To solve this issue, we combine the trained SVD matrix with the feature matrix. When we map a user to this combined matrix, the user is mapped to all the hidden concept spaces SVD has learned. All the books that the model returns are then weighted by how similar they are to the features of the books that the user has rated highly. As a result, you get recommendations that are not purely within the genre you enjoy, but are also not completely oblivious to the types of books you like.
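One way to picture this combination is sketched below. It is only one possible reading of the approach and not the actual Books2Rec pipeline. The latent factors, genre features, `content_weight` parameter, and helper names are all assumptions made up for the example. Each book's normalized latent vector and normalized feature vector are concatenated, so the dot product between two books becomes a weighted mix of their rating-based and content-based cosine similarities.

```python
import numpy as np

def l2_normalize(M):
    """Scale each row to unit length, leaving all-zero rows untouched."""
    norms = np.linalg.norm(M, axis=1, keepdims=True)
    return M / np.where(norms == 0, 1.0, norms)

def hybrid_item_vectors(item_factors, item_features, content_weight=0.5):
    """Concatenate normalized latent factors and content features.

    The dot product of two resulting rows equals
    (1 - content_weight) * latent cosine + content_weight * content cosine.
    """
    latent = l2_normalize(item_factors) * np.sqrt(1.0 - content_weight)
    content = l2_normalize(item_features) * np.sqrt(content_weight)
    return np.hstack([latent, content])

def recommend(item_vectors, liked, top_n=2):
    """Rank books by similarity to the average vector of the liked books."""
    profile = item_vectors[liked].mean(axis=0)
    scores = item_vectors @ profile
    scores[liked] = -np.inf  # never recommend what the user already rated
    return [int(i) for i in np.argsort(-scores)[:top_n]]

# Hypothetical books: 0 = Return of the King, 1 = Fellowship of the Ring,
# 2 = Sisterhood of the Traveling Pants, 3 = some other fantasy novel.
item_factors = np.array([[0.9, 0.10],    # made-up latent factors in which
                         [0.7, 0.50],    # books 0 and 2 look nearly identical
                         [0.9, 0.12],
                         [0.2, 0.90]])
item_features = np.array([[1.0, 0.0, 0.0],   # [fantasy, young_adult, romance]
                          [1.0, 0.0, 0.0],
                          [0.0, 1.0, 1.0],
                          [1.0, 0.0, 0.0]])

pure_svd = hybrid_item_vectors(item_factors, item_features, content_weight=0.0)
hybrid = hybrid_item_vectors(item_factors, item_features, content_weight=0.5)

pure_top = recommend(pure_svd, liked=[0], top_n=1)
hybrid_top = recommend(hybrid, liked=[0], top_n=2)
print("pure latent factors:", pure_top)
print("hybrid:", hybrid_top)
```

With `content_weight=0.0` the toy reader who liked book 0 is pointed at book 2, mirroring the anecdote above. With an even mix, the fantasy titles move to the top while the latent factors still influence their order.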

Web App

Our web application is powered by Flask, the easy-to-use Python web framework. As mentioned above, our website is live, so you can try out your own recommendations.

Conclusion

This is the second post of a multi-part series on our recommender system. The next part will cover how the SVD algorithm works, how we can use it to minimize the error, and how we can use it to serve recommendations.

References

- [1] http://www.mckinsey.com/industries/retail/our-insights/how-retailers-can-keep-up-with-consumers

- [2] The BellKor Solution to the Netflix Grand Prize

- [3] Generalized Hebbian Algorithm for Incremental Latent Semantic Analysis